大規模データ解析ツール

データからの情報抽出限界に挑む

データを活用することにより我々がこの世界を、この宇宙をもっとよく理解できるようにしたいと考えています。そのためにはデータから有益で正確な情報を抽出する必要があります。既存の科学技術はそのための十分な術をすでに与えてくれているでしょうか?私の考えではそれはまだ十分ではなく研究の余地が十分にあります。私はこの目的のための研究を行なっています。

因果を見つける

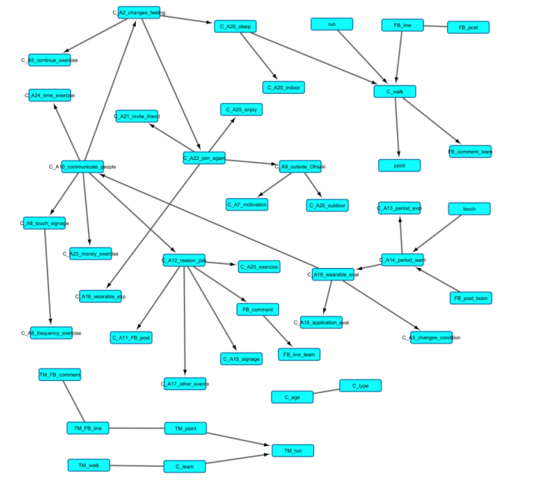

取り組んでいる研究の大きなテーマとして観察データからの因果の推論というものがあります。因果についての統計学的研究は、学術的に非常に難しいタスクであると認識されており、現実にある多種多様なデータへの適用とその活用にもまだかなりの隔たりがあると考えられ、精度そして汎用性ともに高いメソッドやアルゴリズムの研究を実施してきました。

その研究成果を因果分析のソフトウェアとしてツール化し、その実データへの適用を2014年から開始しています。その後CALCプロジェクトとして本社部門とも協力しソニーグループ企業群に展開しています。現在では日米欧におけるエレキ、エンタメ、金融、その他サービスを含む広範なソニーのデータ分析で活用されるようになっており、多くの協力メンバーや賛同者とともにソニーのデータ分析業務の変革に取り組んでいます。この成果を受け、外部へのソフトウェアライセンス販売や受託分析も外部の企業と共同で行ない製造業やサービス業などの様々な分野で利用されています。

この研究の根底にも、データから世界を、宇宙をより理解できるようにしたい、そのために因果関係をデータからもっと識別できる方法の発展が必要だという考えがあります。また、この領域の基礎科学の観点からの興味深い点としてそれが統計学に閉じていないという面があります。科学哲学や物理学とも関係していると考えられ、その方面での考察も進めています。推論メソッド・アルゴリズムや因果情報の活用に関する研究は、未開拓の大きな課題や、実際の活用の過程で新しく見つかる重要な課題もあり、プロジェクト研究員も加わって進めており、データからの推定・推論の限界に挑んでいます。また、他の学問分野への応用も考え、いくつかの大学との共同研究も進めています。

推定の基礎原理を探る

取り組んでいる他の研究の1つには、非常に基本的なのですが、データからの確率や統計モデルの推定に関する考え方について、というテーマがあります。通常確率の推定は、日常の言葉で言えば割合、やや専門的な言葉で言うところの相対頻度、として計算されるということは皆さんの日常生活にも十分馴染んでいるだろうと思います。この相対頻度からなる確率の推定計算は統計学的には尤度という量が最大となるような推定量にするという原理をバックボーンとしています。頻度主義とも言われ統計学では最も基盤となる考え方です。ところが任意のサンプル数で成り立つものではなく、特に少数のサンプルでは信頼性が大きく落ちてしまいます。つまり尤度最大の原理はある種の近似であると考えることができます。そこで筆者は、より汎用的な原理があってもいいのではないかと考えて研究を始め、そこである原理が思い浮かびました。それは物理学における熱力学に基づく考え方であり、自由エネルギー最小原理と呼ぶべきものです。この考え方は2007年に萌芽的研究として発表したあと、精緻化を試みるほか因果の推論メソッドにも活用しています。この原理の統計科学への適用は確率の推定からモデルの選択まで統一的に議論できる可能性がある点でも優れていると考えています。またこの考え方が適用できるのであれば、有限のデータから情報を抽出する世界において、物理学的世界との類似性を議論することにも繋がるため、科学哲学の世界としての深みを持っていると考えています。また、この考え方は実はほぼ同時期に脳科学においても類似し(てはいるが異なっ)た原理が提唱されているほか、AIの大規模言語モデルにおいても私がこの原理に関係して注目している考え方がテクニックとして活用されていることなどもあり、さらに追求する価値が高まっていると考えています。

Projects

Keywords

Related News

Practically effective adjustment variable selection in causal inference

Adjustment Variable Selection for Intervention Estimation Robust to Data Size

同じリサーチエリアの別プロジェクト

Cybernetic Intelligence

人とAIの協動によるストレスフリーなカスタマーサービスの実現へ

ゴールベース資産運用プラットフォーム

人間とAIロボットとの関係性を問いかける体験型インスタレーション